We’ve finally arrived at the third and final installment of this riveting blog series. While some may mourn the loss of the extra sleep this series provided (my prose is usually a great cure for insomnia), in this post we’ll cover the shiny new DCNAUTO specialization and technologies near and dear to my heart. As with the blog on AUTOCOR and ENAUTO 2.0, I hope this helps clarify the reason and intent behind the large reworking of the exam topics and aids you in your studies.

A fork in the road

The original DCAUTO exam had the “shortest” list of exam topics (based solely on my unscientific analysis of the amount of text on a PDF), but that doesn’t mean that the exam was simple. It covered a broad set of technologies with disparate terminology and spanned several (typically) separate teams (server/compute teams usually are separate from the datacenter networking teams).

But even in an organization that had both ACI and UCS, most of the time you worked with only one technology or the other, not both. This complication was exacerbated by the fact that the Unified Computing System (UCS) Manager Platform Emulator (UCSM-PE) could not be connected to Cisco Intersight; only certain builds, available solely to special teams like Cisco DevNet for its Sandbox, could do so.

This led to a massive internal decision: How do we provide an automation certification that focuses on the datacenter, covers the network technology available today, includes platforms and devices, and addresses the evolving realities of the datacenter (like Kubernetes and containers)? We had some tough choices to make, but the result is DCNAUTO 2.0 (note the “N” for networking).

Give it to me straight, what has been removed from DCAUTO?

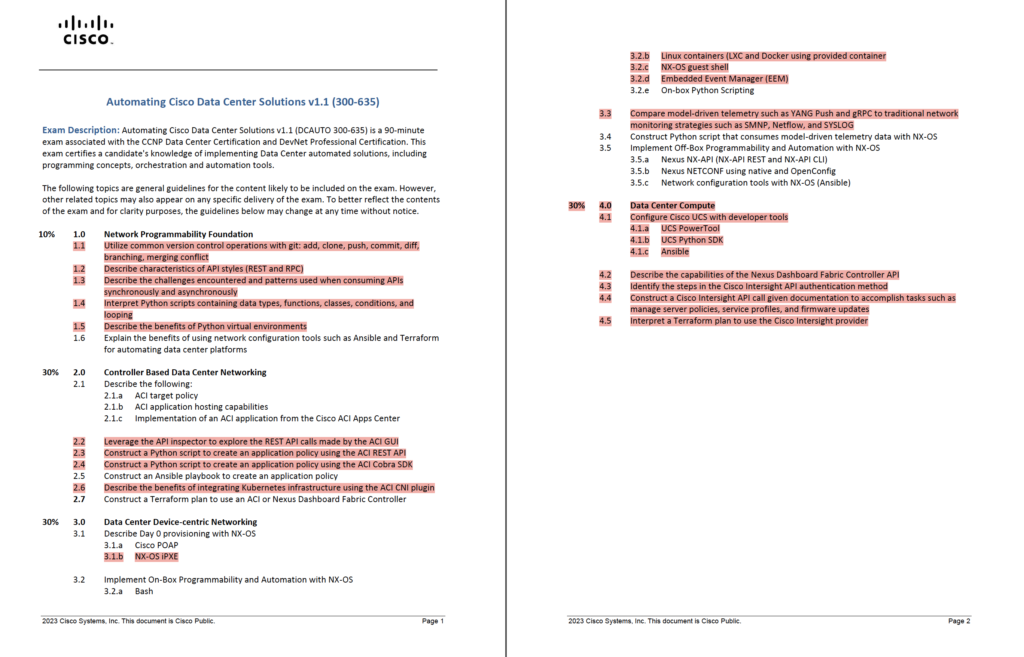

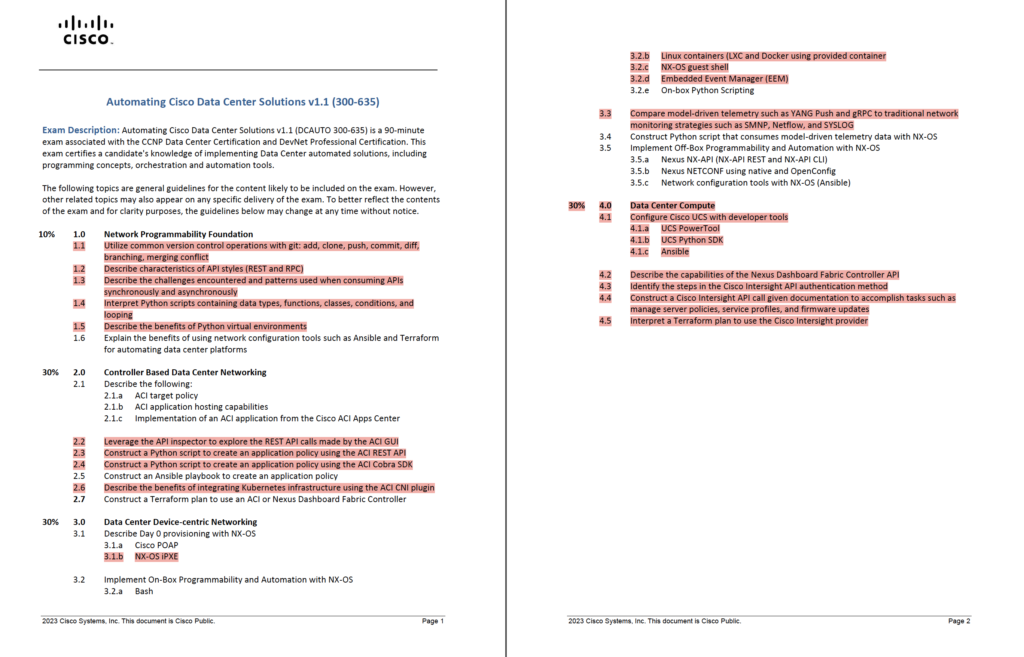

Based on these images, you can see that a large chunk of the original blueprint (the highlighted sections) has been removed or changed in some way. In some cases, topics were removed for the same reasons as in ENCOR 2.0; topics like Git, basic APIs, or Python virtual environments were removed because (a) they are assumed knowledge, (b) they are covered in the core exam, or (c) they can be replaced with other technologies that work better with larger workflows (e.g., development inside a container with mapped volumes can replace Python virtual environments).

Within Domain 2.0, we removed many of the specific API and SDK tasks as they pertain to ACI. While these two methods of automation are still valid, much of the development and integration effort within the datacenter has focused on Infrastructure as Code (IaC) tools. Being able to automate platforms and technologies with tools that have multi-platform support is important because datacenters are increasingly heterogeneous, so understanding how to use these tools within the network infrastructure becomes a critical skill.

Domain 3.0 received a light touch of changes, mostly focused on refining and trimming down superfluous device-centric automation and app-hosting methods. While these capabilities are still built into our vast datacenter switching portfolio, we tried to focus on the most common use cases and technologies. Remember, the goal of the new blueprints is to build practicality and applicability into the exams, so we had to trim away some of the esoteric or less-used features and functionality.

And you dropped compute?!

Yes.

I guess you’ll be looking for a reason on this one, too. Believe me, it wasn’t an easy decision. We went back and forth on this, and there were strong arguments on both sides, but ultimately, more often than not, compute and server teams are completely separate from network infrastructure teams, and the practitioners within those teams have vastly different skill sets, which makes the crossover that much more difficult.

Rather than weakening the depth of the test (and the practical applications gained from it) to support added breadth, we decided to drop the compute automation completely. I can already hear the sighs of relief from network automation folks, but I know there are a few folks that will miss the inclusion of Intersight and the UCSM APIs (my former compute Developer Advocate counterpart included).

Enough about what was dropped, what do we need to study?

Within the datacenter, there are a few key technologies that we chose to focus on. As with AUTOCOR and ENAUTO 2.0, refer to the top paragraph of the exam topics list to understand the in-scope platforms. These platforms shouldn’t come as a surprise, but it’s helpful to set context around your studies.

Infrastructure as Code (IaC)

The datacenter must be:

- Agile

- Multivendor

- Even multicloud

This means click-ops, or separate automations for each platform, won’t always be acceptable. The unifying factor is a tool like Ansible or Terraform, where the syntax across platforms and clouds stays the same and the only difference is the modules/collections or providers in use.

The DCNAUTO exam reflects this, as 25% of the exam falls within the IaC domain. This requires you to be familiar with the tools and control features as well as the platforms covered by the blueprint.
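To make the “same syntax, different provider” point concrete, here is a minimal sketch that builds a Terraform document in Terraform’s JSON syntax (a `main.tf.json` file) from Python. It assumes the `CiscoDevNet/aci` provider and its `aci_tenant` resource; the helper name `terraform_document`, the APIC URL, the credentials, and the tenant name are all hypothetical placeholders, not a recommended layout.

```python
import json


def terraform_document(provider, source, provider_config, resources):
    """Build a Terraform JSON-syntax document (*.tf.json) for one provider.

    Hypothetical helper: the top-level shape (terraform / provider / resource)
    is identical for every provider; only the provider block and the resource
    types change.
    """
    return {
        "terraform": {"required_providers": {provider: {"source": source}}},
        "provider": {provider: provider_config},
        "resource": resources,
    }


# Placeholder lab values (hypothetical): APIC URL, credentials, tenant name.
aci_doc = terraform_document(
    provider="aci",
    source="CiscoDevNet/aci",
    provider_config={
        "url": "https://apic.example.com",
        "username": "admin",
        "password": "REPLACE_ME",
        "insecure": True,  # lab only: skips APIC certificate verification
    },
    resources={"aci_tenant": {"demo": {"name": "demo-tenant"}}},
)

if __name__ == "__main__":
    # Save this output as main.tf.json, then run `terraform init` and `terraform plan`.
    print(json.dumps(aci_doc, indent=2))
```

Targeting a different platform would, in this sketch, only swap the provider block and the resource types; the surrounding workflow (`init`, `plan`, `apply`) stays the same.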

On-box automation and programmability

With the size and scale of modern datacenter networks, controller platforms are often used to manage the fabric. However, specific network automation solutions or day-0 provisioning may still dictate a “box-by-box” process. Because of this, we’ve included exam topics in Domain 3 that validate a learner’s knowledge of these “network element” automation tasks.

In terms of specific network element programmability, we’ve included:

- NETCONF support, because YANG models such as OpenConfig are used in large, potentially multivendor or web-scale datacenters to normalize configuration across a variety of devices

- Familiarity with NETCONF and ncclient, which can be used to deliver XML-structured payloads to a device via code written in Python

- Understanding the day-0 provisioning of a device outside of the use of a controller, and the on-box programmability methods available within the Nexus platform

- Knowledge around NX-API and the flow of creating bespoke templates (which can then be applied as policy) within Nexus Dashboard rounds out the domain
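As a rough illustration of the NETCONF and ncclient items above, the sketch below builds an XML payload against the OpenConfig interfaces model and pushes it with ncclient’s `edit_config`. It assumes the switch has NETCONF enabled on port 830, supports the OpenConfig interfaces model, and names interfaces like `eth1/1`; the function names, host, credentials, and description text are hypothetical. ncclient is imported lazily so the payload builder runs without it installed.

```python
import xml.etree.ElementTree as ET

NETCONF_BASE_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"
OC_INTERFACES_NS = "http://openconfig.net/yang/interfaces"

# Readable prefixes in the serialized XML instead of ns0/ns1.
ET.register_namespace("nc", NETCONF_BASE_NS)
ET.register_namespace("oc", OC_INTERFACES_NS)


def oc(tag):
    """Qualify a tag with the OpenConfig interfaces namespace."""
    return f"{{{OC_INTERFACES_NS}}}{tag}"


def build_oc_description_payload(ifname, description):
    """Return a NETCONF <config> string that sets one interface description
    using the OpenConfig interfaces model (hypothetical helper)."""
    config = ET.Element(f"{{{NETCONF_BASE_NS}}}config")
    interface = ET.SubElement(ET.SubElement(config, oc("interfaces")), oc("interface"))
    ET.SubElement(interface, oc("name")).text = ifname  # list key
    oc_config = ET.SubElement(interface, oc("config"))
    ET.SubElement(oc_config, oc("name")).text = ifname
    ET.SubElement(oc_config, oc("description")).text = description
    return ET.tostring(config, encoding="unicode")


def push_config(host, username, password, payload, port=830):
    """Send the payload to the running datastore via ncclient (pip install ncclient)."""
    from ncclient import manager  # lazy import: only needed when talking to a device

    with manager.connect(
        host=host,
        port=port,
        username=username,
        password=password,
        hostkey_verify=False,  # lab only; verify SSH host keys in production
        device_params={"name": "nexus"},
    ) as m:
        reply = m.edit_config(target="running", config=payload)
        return reply.ok


# Example (requires a reachable lab switch; values are placeholders):
# push_config("192.0.2.10", "admin", "REPLACE_ME",
#             build_oc_description_payload("eth1/1", "uplink to spine-1"))
```

Building the payload with ElementTree rather than string templates keeps namespaces and escaping correct, which matters once descriptions contain characters like `&` or `<`.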

Operations (including Linux Networking!)

One of the larger shifts (across all new CCNP-Automation exams) has been the focus on operational aspects of an automation solution. After all, what good is deploying a change without understanding the impact of that change on the network? This is no different within the datacenter and some would argue that it’s more important; datacenters are finely tuned instruments to move data very quickly from place to place. If it doesn’t work, it’s generally costing large sums of money.

In this exam, we haven’t so much “removed” topics as shifted their complexity. The original DCAUTO exam had elements that touched on model-driven telemetry and understanding subscriptions to data, including next-generation protocols like gNMI and gRPC. We also include digital twins and pyATS validation, as we have in other exams. Not to be forgotten, we also cover retrieving health information from devices using Python.
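One way to retrieve health information with Python is NX-API’s JSON-RPC endpoint (`https://<switch>/ins`, method `cli`). The sketch below assumes NX-API is enabled and uses only the standard library; the helper names are hypothetical, and the `show system resources` field names (`cpu_state_idle`, `memory_usage_used`, `memory_usage_total`) are assumptions to confirm against the JSON your NX-OS release actually returns.

```python
import base64
import json
import ssl
import urllib.request


def nxapi_cli_payload(command, request_id=1):
    """JSON-RPC body for NX-API's `cli` method (structured JSON output)."""
    return [
        {
            "jsonrpc": "2.0",
            "method": "cli",
            "params": {"cmd": command, "version": 1},
            "id": request_id,
        }
    ]


def run_nxapi(host, username, password, command):
    """POST one show command to https://<host>/ins and return its result body."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    request = urllib.request.Request(
        f"https://{host}/ins",
        data=json.dumps(nxapi_cli_payload(command)).encode(),
        headers={"Content-Type": "application/json-rpc", "Authorization": f"Basic {token}"},
        method="POST",
    )
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # lab only: switches often use self-signed certificates
    ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=ctx, timeout=10) as resp:
        reply = json.load(resp)
    if isinstance(reply, list):  # batched requests may come back as a list
        reply = reply[0]
    return reply["result"]["body"]


def health_summary(body):
    """Reduce `show system resources` output to two percentages.
    Field names are assumptions; verify them on your platform."""
    cpu_busy = 100.0 - float(body["cpu_state_idle"])
    mem_used = 100.0 * int(body["memory_usage_used"]) / int(body["memory_usage_total"])
    return {"cpu_busy_pct": round(cpu_busy, 1), "memory_used_pct": round(mem_used, 1)}


# Example (requires a reachable lab switch; values are placeholders):
# print(health_summary(run_nxapi("192.0.2.10", "admin", "REPLACE_ME", "show system resources")))
```

Keeping transport (`run_nxapi`) separate from interpretation (`health_summary`) lets you test the health logic against saved JSON without a device, which also fits nicely with digital-twin or pyATS-style validation.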

Finally, we also added the requirement to troubleshoot packet flows from Linux-based hosts running containers. We all know that containers are the new VMs, but the hosts running those containers don’t use the same tools and terminology as a Type-1 Hypervisor; we must understand how Linux networking works and how it is configured.

This includes how interfaces, subinterfaces, and bonded interfaces are created, as well as how standard bridges are defined and the relationship between virtual Ethernet (veth) interfaces at the host level and interfaces defined within the container runtime. These skills are no longer optional, and we felt it important that candidates understand them well enough to fix them when they break.

We had to toss in some AI, too

Just like with the rest of the professional automation specializations, some AI needed to be included within the exam topics list; it’s being talked about everywhere and our certifications should be no different.

- Understanding the security implications of using AI within the datacenter is important to protect the volume and value of that data. AI tools can have unintended consequences around data exposure and can become a vector for exfiltration.

- As agentic AI becomes mainstream, understanding how those agents connect to various platforms, devices, and controllers is a baseline skill that everyone should have.

- With the prevalence of automation and orchestration within the datacenter, describing and understanding how generative AI can be used to accelerate prototyping and iteration on network automation solutions will no longer be an optional skill. It should be validated for any automation professional.
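One small, practical response to the data-exposure concern is to strip secrets from device configurations before they are pasted into, or sent to, a generative AI service. The sketch below is a hypothetical, deliberately incomplete redactor that assumes NX-OS-style `username ... password <type> <hash>` and `snmp-server community <string>` lines; a real deployment would need patterns for every secret-bearing command in its estate.

```python
import re

# Hypothetical, non-exhaustive patterns for NX-OS-style secret-bearing lines.
# Group 1 keeps the command keywords; the secret token after it is masked.
SECRET_PATTERNS = [
    re.compile(r"^(\s*username \S+ password \d+ )\S+", re.MULTILINE),
    re.compile(r"^(\s*snmp-server community )\S+", re.MULTILINE),
]


def redact(config_text, mask="<REDACTED>"):
    """Return config_text with known secret tokens replaced by mask."""
    for pattern in SECRET_PATTERNS:
        # A function replacement avoids backslash/escape surprises in mask.
        config_text = pattern.sub(lambda m: m.group(1) + mask, config_text)
    return config_text


if __name__ == "__main__":
    sample = (
        "username admin password 5 $5$examplehash role network-admin\n"
        "snmp-server community examplecommunity group network-operator\n"
        "hostname leaf1\n"
    )
    print(redact(sample))
```

Pattern-based redaction is a safety net, not a guarantee; it complements, rather than replaces, policies on which data may leave the datacenter at all.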

Bringing it all together

Through this blog, and the previous ones on the AUTOCOR and ENAUTO 2.0, I hope you’ve gained a little bit more insight into the certification and the specific exams (both core and concentration). This isn’t just related to the exams and topics themselves, but also the mindset shift and different approach in creating the exam topics list, moving from software engineers that are learning “network” to network engineers that are learning “automation.” It sounds subtle, but the outcome can be quite different. Through this difference, we hope that you find that the new exams align to your automation work in a much more impactful way.

As always, happy learning! If you have any questions, please contact me on X (@qsnyder) or through the Cisco Learning Network message boards.

Effective February 3, 2026, the 300-635 DCNAUTO exam will be updated to v2.0 and renamed, “Automating Cisco Data Center Networking Solutions v2.0.”
